Autoencoders and their applications in bioinformatics

Objectives: Gain basic knowledge of autoencoders.

Introduction to Autoencoders

What are Autoencoders?

Autoencoders are neural networks used for unsupervised learning. The name reflects what they do: the network learns to "encode" its own input into a compact representation and then "decode" that representation back into a reconstruction of the original input.

Autoencoders consist of two main parts: an encoder and a decoder. The encoder maps the input data to a lower-dimensional representation, or latent space, and the decoder maps the latent representation back to the original data space.

During training, the autoencoder is given a set of input data and is trained to reconstruct the input data as closely as possible. In other words, we train the encoder and decoder to minimize the reconstruction error between the input and reconstructed data.
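The training objective described above can be written compactly. The notation here is introduced only for illustration and is not taken from the text: $f_\theta$ is the encoder, $g_\phi$ is the decoder, and $x_1, \dots, x_N$ are the training samples. With a squared-error loss, which is one common choice among several, the objective is:

```latex
\min_{\theta,\,\phi} \; \frac{1}{N} \sum_{i=1}^{N}
  \bigl\lVert x_i - g_\phi\bigl(f_\theta(x_i)\bigr) \bigr\rVert_2^2
```

The inner term $g_\phi(f_\theta(x_i))$ is the reconstruction of $x_i$, so the sum is small only when every training sample survives the round trip through the latent space.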

One of the primary purposes of autoencoders is to learn a compact, efficient representation of the input data. They can be helpful in various applications, such as data compression, feature extraction, and dimensionality reduction.

Autoencoders can also be used for generative modeling. After training, the decoder can be given latent vectors sampled at random and will map them to new data samples that resemble the training data. This works best when the latent space has been shaped to follow a known distribution, as in variational autoencoders.

There are several types of autoencoders, including vanilla autoencoders, denoising autoencoders, and variational autoencoders. Each type has its unique characteristics and is helpful for different tasks. In short, autoencoders are neural networks trained to reconstruct their input data.

How do Autoencoders work?

Autoencoders learn a compact, efficient representation of the input data in a latent space and use this compressed representation to reconstruct the input as accurately as possible. The encoder and decoder are trained together to minimize the reconstruction error, and training is repeated for multiple epochs until the error reaches a satisfactory level.

The architecture of an autoencoder consists of two main elements: an encoder and a decoder. The encoder maps the data from the input to a lower-dimensional representation or latent space, and the decoder maps the latent representation back to the original data space.

The encoder and decoder are typically implemented as neural networks, with the encoder mapping the input data to the latent space using a series of linear and nonlinear transformations and the decoder mapping the latent representation back to the original data space using a similar series of transformations.

During training, the autoencoder is given a set of input data and is trained to reconstruct the input data as closely as possible. The encoder and decoder are trained to minimize the reconstruction error between the input and reconstructed data.

The process of training an autoencoder can be broken down into the following steps:

- The input data is passed through the encoder, which maps it to the latent space using a series of linear and nonlinear transformations.

- The latent representation is passed through the decoder, which maps it back to the original data space using a series of linear and nonlinear transformations.

- The reconstruction error is calculated as the difference between the input and the reconstructed data, typically with a loss function such as mean squared error.

- The weights and biases of the encoder and decoder are updated using an optimization algorithm, such as stochastic gradient descent, to minimize the reconstruction error.

- The process is repeated for multiple epochs until the reconstruction error reaches a satisfactory level.

Once the autoencoder has been trained, we can use it to reconstruct new data by passing that data through the encoder and decoder in the same way as during training.
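The training steps listed above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a reference implementation: the network has a single tanh encoder layer and a linear decoder, it uses full-batch gradient descent rather than stochastic mini-batches, and the function names, toy data, and hyperparameter values are all made up for the example.

```python
import numpy as np

def train_autoencoder(X, latent_dim=2, epochs=2000, lr=0.05, seed=0):
    """Train a tiny autoencoder (tanh encoder, linear decoder) by gradient descent."""
    rng = np.random.default_rng(seed)
    n_features = X.shape[1]
    # Weights and biases of the encoder and decoder.
    W_enc = rng.normal(0.0, 0.1, size=(n_features, latent_dim))
    b_enc = np.zeros(latent_dim)
    W_dec = rng.normal(0.0, 0.1, size=(latent_dim, n_features))
    b_dec = np.zeros(n_features)
    losses = []
    for _ in range(epochs):
        # Encoder: input -> latent space (linear map followed by tanh).
        Z = np.tanh(X @ W_enc + b_enc)
        # Decoder: latent space -> original data space.
        X_hat = Z @ W_dec + b_dec
        # Reconstruction error: mean squared error over all entries.
        err = X_hat - X
        losses.append(float(np.mean(err ** 2)))
        # Backpropagation: gradients of the loss w.r.t. each parameter.
        g_out = 2.0 * err / err.size
        g_W_dec = Z.T @ g_out
        g_b_dec = g_out.sum(axis=0)
        g_pre = (g_out @ W_dec.T) * (1.0 - Z ** 2)  # tanh'(a) = 1 - tanh(a)^2
        g_W_enc = X.T @ g_pre
        g_b_enc = g_pre.sum(axis=0)
        # Gradient descent update of all weights and biases.
        W_enc -= lr * g_W_enc
        b_enc -= lr * g_b_enc
        W_dec -= lr * g_W_dec
        b_dec -= lr * g_b_dec
    return (W_enc, b_enc, W_dec, b_dec), losses

def reconstruct(X, params):
    """Pass data through the trained encoder and decoder."""
    W_enc, b_enc, W_dec, b_dec = params
    return np.tanh(X @ W_enc + b_enc) @ W_dec + b_dec

# Toy data: 5 features driven by 2 hidden factors, standardized per feature.
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 2)) @ rng.normal(size=(2, 5))
X = (X - X.mean(axis=0)) / X.std(axis=0)

params, losses = train_autoencoder(X)
print(f"reconstruction MSE: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

In practice one would normally use a deep learning framework that computes the gradients automatically; writing them by hand here only makes each step of the loop visible.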

Types of Autoencoders

Autoencoders are a neural network architecture we can use for unsupervised learning. They are composed of two main parts: an encoder and a decoder. The encoder takes in the input data and learns a compact representation of it, known as the latent representation or encoding. The decoder takes this latent representation as input and generates an output that reconstructs the original input.

There are several types of autoencoder architectures, which can be primarily classified into the following categories:

- Vanilla autoencoder: This is the simplest form of autoencoder, where the encoder and decoder are both feedforward neural networks. It consists of an input layer, an output layer, and one or more hidden layers in between.

- Convolutional autoencoder: This autoencoder type is used to learn from data with a grid-like topology, such as images. It uses convolutional layers in the encoder and decoder, which are well-suited for learning spatial relationships in the input data.

- Denoising autoencoder: This autoencoder is trained to reconstruct the original input from a corrupted version. The corruption can be random noise added to the input or occlusion of a portion of the input. The goal is to learn a robust representation of the input that is invariant to noise or occlusion.

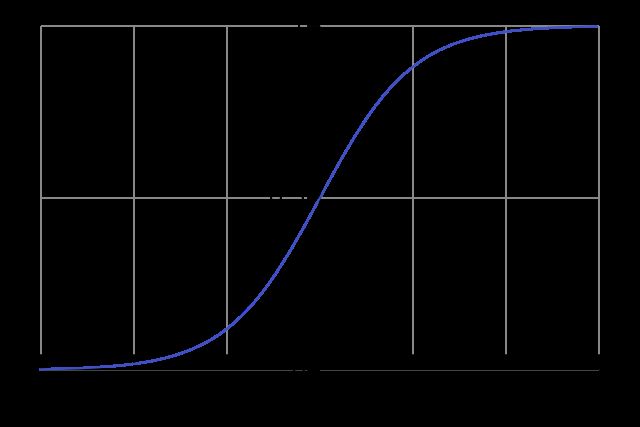

- Variational autoencoder (VAE): This probabilistic autoencoder learns a distribution over the latent representation rather than a deterministic encoding. It consists of a pair of encoder and decoder networks and an additional loss term that encourages the latent representation to be close to a prior distribution, such as a standard normal distribution.

- Deep autoencoder: This is a multi-layer autoencoder, with more than one hidden layer in the encoder and decoder. It allows for learning more complex representations of the input data but may be more prone to overfitting than autoencoders with a single hidden layer.

- Recurrent autoencoder: This type of autoencoder uses recurrent neural network (RNN) layers in the encoder and decoder, allowing it to process sequential data such as time series or natural language.

- Convolutional-recurrent autoencoder: This combines a convolutional autoencoder and a recurrent autoencoder, allowing it to learn features from both spatial and temporal relationships in the input data.

- Sparse autoencoder: This type of autoencoder often has many units in the hidden layer, but a sparsity constraint ensures that only a small number are active for any given input, which encourages a compact and informative representation of the input data.
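To make the variational autoencoder's "additional loss term" concrete, the sketch below computes it for the most common setup. The setup is an assumption, not something stated above: the encoder outputs a mean and a log-variance for each latent dimension (a diagonal Gaussian), and the prior is a standard normal distribution. Under those assumptions the term is a Kullback-Leibler divergence with a closed form. The function names are illustrative.

```python
import numpy as np

def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, diag(exp(log_var))) to N(0, I), one value per sample."""
    return -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=1)

def sample_latent(mu, log_var, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, I) (the reparameterization trick)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

rng = np.random.default_rng(0)
mu = np.array([[0.0, 0.0], [1.0, 0.0]])   # two samples, two latent dimensions
log_var = np.zeros_like(mu)               # unit variance in every dimension
print(kl_to_standard_normal(mu, log_var)) # zero when the encoding equals the prior
z = sample_latent(mu, log_var, rng)
```

During training, this term is added to the reconstruction loss, so the encoder is penalized whenever its latent distribution drifts away from the prior.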

Applications of Autoencoders in Bioinformatics

Dimensionality Reduction

Dimensionality reduction is the process of reducing the number of dimensions (or features) in a dataset while retaining as much of the original information as possible. It is often helpful for visualization, data compression, and feature selection.

Autoencoders are used for dimensionality reduction by learning a function that maps the input data to a lower-dimensional latent space and reconstructs the original data from the latent space. The encoder can reduce the dimensionality of new data points, and the decoder can reconstruct the original data from the latent representation.

In an autoencoder, the encoder part maps the input data to a lower-dimensional representation or latent space, and the decoder maps the latent representation back to the original space. The autoencoder aims to learn a function that can reconstruct the original input from the latent representation as accurately as possible.

To train an autoencoder for dimensionality reduction, we first feed the input data into the encoder, which maps it to the latent space. We then feed the latent representation into the decoder, which tries to reconstruct the original input. The difference between the original and reconstructed input is computed as a loss, which is then used to update the weights of the encoder and decoder via backpropagation.

We can use several variations of autoencoders for dimensionality reduction, such as convolutional autoencoders and denoising autoencoders.

Convolutional autoencoders are particularly well-suited for image data, as they can learn locally correlated features and preserve the input's spatial structure.

On the other hand, denoising autoencoders are designed to reconstruct a clean version of the input from a noisy version, which can be useful for removing noise or corruption from the data.

Once the autoencoder has been trained, we can use the encoder to map new data points to the latent space, effectively reducing the dimensionality of the data. We can then use the decoder to reconstruct the original data from the latent representation, although this is not typically necessary for dimensionality reduction tasks.
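The encode-then-decode workflow can be illustrated without any training loop by using a purely linear autoencoder. This is a deliberate simplification: for a linear encoder and decoder with a squared-error loss, the best achievable reconstruction is the same as that of principal component analysis, so the sketch obtains the weights directly from a singular value decomposition. The function names and the toy "expression matrix" are hypothetical.

```python
import numpy as np

def fit_linear_autoencoder(X, latent_dim):
    """Closed-form linear autoencoder: keep the top principal directions."""
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    components = Vt[:latent_dim]          # shape (latent_dim, n_features)
    return mean, components

def encode(X, mean, components):
    """Map data to the lower-dimensional latent space."""
    return (X - mean) @ components.T

def decode(Z, mean, components):
    """Map latent vectors back to the original data space."""
    return Z @ components + mean

# Toy data: 100 samples, 50 features, generated from 3 underlying factors.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3)) @ rng.normal(size=(3, 50))

mean, components = fit_linear_autoencoder(X, latent_dim=3)
Z = encode(X, mean, components)           # reduced representation
X_hat = decode(Z, mean, components)       # reconstruction
print(Z.shape, float(np.max(np.abs(X - X_hat))))
```

Because the toy data really has only three underlying factors, three latent dimensions are enough to reconstruct it almost exactly. A nonlinear autoencoder follows the same encode and decode pattern but can capture curved structure that this linear version cannot.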

Data Denoising

Autoencoders are used for data denoising by adding noise to the input data, encoding it to a latent space, and then reconstructing the original input using a decoder. The autoencoder is trained to minimize a reconstruction loss function, which encourages it to learn a representation of the input that can remove the noise effectively.

Autoencoders are commonly used for data denoising. They consist of both an encoder and a decoder, both neural networks. The encoder takes an input and maps it to a lower-dimensional representation known as the latent space. The decoder then takes this latent representation and reconstructs the original input.

To use an autoencoder for data denoising, we first add noise to the input data. This could be Gaussian noise, salt-and-pepper noise, or whatever type of noise we want to remove from the data. We then feed the noisy input into the encoder, which maps it to the latent space. The decoder takes this latent representation and reconstructs the original input, ideally with the noise removed.

To train the autoencoder for data denoising, we can use a reconstruction loss function such as mean squared error (MSE) or mean absolute error (MAE). This loss function measures the difference between the reconstructed and the original, clean input. The autoencoder is trained to minimize this loss function, which encourages the encoder and decoder to learn a representation of the input that can remove the noise effectively.

One of the advantages of using autoencoders for data denoising is that they can learn to remove various types of noise from the data as long as the noise is consistent across the training data. In other words, the autoencoder can generalize to new samples corrupted by the same type of noise, even though it has never seen those particular noisy samples before.
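The sketch below shows how the training pairs for a denoising autoencoder might be prepared and scored. It covers only the corruption step and the two loss functions named above; the model itself is left out. All function names, noise levels, and the toy data are assumptions made for the example.

```python
import numpy as np

def add_gaussian_noise(X, std, rng):
    """Corrupt every entry with zero-mean Gaussian noise."""
    return X + rng.normal(0.0, std, size=X.shape)

def add_salt_and_pepper(X, fraction, rng, low=0.0, high=1.0):
    """Set a random fraction of entries to the minimum (pepper) or maximum (salt)."""
    X_noisy = X.copy()
    corrupt = rng.random(X.shape) < fraction
    salt = rng.random(X.shape) < 0.5
    X_noisy[corrupt & salt] = high
    X_noisy[corrupt & ~salt] = low
    return X_noisy

def mse(target, prediction):
    return float(np.mean((target - prediction) ** 2))

def mae(target, prediction):
    return float(np.mean(np.abs(target - prediction)))

rng = np.random.default_rng(0)
X_clean = rng.random((4, 6))                      # toy data scaled to [0, 1)
X_gauss = add_gaussian_noise(X_clean, std=0.1, rng=rng)
X_sp = add_salt_and_pepper(X_clean, fraction=0.2, rng=rng)

# A denoising autoencoder receives the noisy array as input, but its
# reconstruction is scored against the clean array.
print(mse(X_clean, X_gauss), mae(X_clean, X_sp))
```

The important detail is the asymmetry: the noisy version goes in, and the loss compares the output with the clean version, which is what pushes the network to remove the noise rather than copy it.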

Anomaly Detection

It is worth noting that autoencoders are not the only method we can use for anomaly detection, and they are not always the best choice for a given problem. However, they can be a valuable tool for detecting anomalies in many data types, including images, time series, and text.

An autoencoder consists of two main components: an encoder and a decoder. The encoder takes in an input, typically an image or a time series, and learns a compact representation of the input, often referred to as the latent representation or the bottleneck. The decoder takes in this latent representation and tries to reconstruct the original input as closely as possible.

In anomaly detection, autoencoders can identify abnormal or unusual patterns in the data. The idea is to train the autoencoder on a dataset of normal (typical) examples and then use it to detect anomalies in new, unseen data.

Here's how it works:

- First, the autoencoder is trained on a dataset of normal (typical) examples. During training, the encoder learns to compress the input data into a latent representation, and the decoder learns to reconstruct the original input from this latent representation.

- After training, the autoencoder can compress and reconstruct new data. If the reconstructed data is significantly different from the original input data, the new data likely contains an anomaly.

- We can use a reconstruction error to quantify the difference between the original input and the reconstructed data. This error is simply the difference between the original input and the reconstructed data, measured using a metric such as mean squared error (MSE) or mean absolute error (MAE). A higher reconstruction error indicates a larger difference between the original and reconstructed data and, therefore, a higher likelihood of an anomaly.

- A threshold can be set to decide whether a given reconstruction error is anomalous: errors above the threshold are flagged as anomalies, and errors below it are treated as normal.

- Alternatively, we can use the reconstruction error to calculate the probability of anomaly. For example, a Gaussian distribution can be fitted to the reconstruction errors of the normal data, and the probability of anomaly can be calculated based on the distance of the new data's reconstruction error from the mean of the Gaussian distribution.
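The last two steps above can be sketched with the Python standard library alone. The reconstruction errors below are made-up numbers standing in for the output of a trained autoencoder, and the "mean plus three standard deviations" threshold is one common convention rather than a rule from the text. The function names are illustrative.

```python
from statistics import NormalDist, mean, stdev

def fit_error_model(normal_errors):
    """Fit a Gaussian to reconstruction errors measured on normal data."""
    return NormalDist(mu=mean(normal_errors), sigma=stdev(normal_errors))

def is_anomaly(error, threshold):
    """Hard decision: flag errors above the threshold."""
    return error > threshold

def anomaly_score(error, model):
    """Soft score in [0, 1]: share of the fitted Gaussian lying below this error."""
    return model.cdf(error)

# Hypothetical reconstruction errors (for example, MSE) on held-out normal samples.
normal_errors = [0.10, 0.12, 0.09, 0.11, 0.13, 0.10, 0.08, 0.12]
model = fit_error_model(normal_errors)
threshold = model.mean + 3 * model.stdev

for err in (0.11, 0.50):
    print(err, is_anomaly(err, threshold), round(anomaly_score(err, model), 3))
```

The threshold should be chosen on data that was not used for training, since the autoencoder's errors on its own training set tend to be optimistically low.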

Data Generation

To use autoencoders for data generation, we can train an autoencoder on a dataset and then use the decoder part of the network to generate new data samples. The process works as follows:

- First, we need to train an autoencoder on a dataset by feeding the training data through the network and adjusting the network weights so that the decoder output is as close as possible to the original input.

- Once the autoencoder is trained, we can use the encoder part of the network to compress the data into a lower-dimensional representation and the decoder part of the network to reconstruct the data from the compressed representation.

- To generate new data, we sample a random vector in the lower-dimensional latent space, in place of the compressed representation that the encoder would normally produce. We then feed this vector into the decoder part of the network, which maps it to a new data sample.

One benefit of using autoencoders for data generation is that they can learn the underlying structure of the data, which can help generate samples similar to the training data.

However, autoencoders are not always the best choice for data generation. They can be prone to producing samples that are too similar to the training data rather than generating entirely new and diverse samples.
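The generation step can be sketched as follows. The decoder here is a placeholder with random, untrained weights, so its outputs are meaningless; it only shows the shape of the procedure. Sampling from a standard normal distribution is an assumption that fits variational autoencoders, whose latent space is trained to match that prior. A plain autoencoder gives no such guarantee about where its latent codes lie.

```python
import numpy as np

def generate_samples(decoder, latent_dim, n_samples, rng):
    """Sample latent vectors from N(0, I) and map them through the decoder."""
    z = rng.standard_normal((n_samples, latent_dim))
    return decoder(z)

rng = np.random.default_rng(0)
W_dec = rng.normal(size=(2, 8))   # placeholder weights, NOT a trained decoder

def toy_decoder(z):
    """Stand-in for a trained decoder: 2 latent dimensions -> 8 features."""
    return np.tanh(z @ W_dec)

samples = generate_samples(toy_decoder, latent_dim=2, n_samples=5, rng=rng)
print(samples.shape)  # (5, 8)
```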

Building an Autoencoder in Python

Preprocessing the Data

Defining the Model Architecture

Training the Model

Evaluating the Model Performance

Advanced Techniques for Autoencoder Training

Overfitting and Underfitting

Overfitting occurs when a model is trained too closely on the training data, resulting in poor generalization to new, unseen data.

In the case of autoencoders, overfitting can occur when the model can reconstruct the training data perfectly but cannot reconstruct new data samples accurately. Overfitting can happen if the model is too complex or if the training data is not representative of the data on which we use the model.

On the other hand, underfitting occurs when the model cannot accurately reconstruct even the training data, either because it is not complex enough or because it has not been trained long enough. Underfitting leads to poor performance on the training data and poor generalization to new data.

When training autoencoders, we can use several advanced techniques to prevent overfitting and underfitting. Some of these techniques include:

- Regularization: Regularization is a method of adding constraints to the model to prevent it from becoming too complex. In the case of autoencoders, regularization can be applied to the weights of the network to avoid overfitting.

- Early stopping: Early stopping interrupts training before the model overfits. We monitor the model's performance on a validation set and stop training when that performance starts to degrade.

- Dropout: Dropout randomly drops out a certain percentage of neurons during training, which helps to prevent overfitting by keeping the model from relying too heavily on any single feature.

- Data augmentation: Data augmentation generates new training examples by applying transformations to the existing training data. Augmentation can prevent overfitting by increasing the diversity of the training data.

- Transfer learning: Transfer learning uses a pre-trained model as a starting point for training a new model. It can be helpful for autoencoders because the knowledge learned by the pre-trained model is used to initialize the weights of the new model, which can help to prevent overfitting.
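Of the techniques above, early stopping is the easiest to show in isolation. The sketch below applies a patience rule to a list of validation losses, one per epoch. The rule and the `patience` and `min_delta` parameters are common conventions chosen for this example, and the loss values are invented.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve by more than
    min_delta for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch      # new best: reset the counter
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch                 # out of patience: stop here
    return len(val_losses) - 1, best_epoch           # never triggered

# Hypothetical validation losses: improving at first, then degrading.
val_losses = [0.90, 0.70, 0.60, 0.55, 0.56, 0.58, 0.60, 0.65]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=3)
print(stop_epoch, best_epoch)  # 6 3
```

In a real training loop, one would also save the model weights at `best_epoch` and restore them after stopping, so that the final model is the one with the lowest validation loss.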

Regularization Techniques

Regularization is a method of adding constraints to a machine-learning model to prevent it from becoming too complex and overfitting the training data.

We can use several regularization techniques when training autoencoders to avoid overfitting and to improve generalization to new data.

- Weight regularization: Weight regularization adds a penalty term to the loss function based on the magnitude of the weights in the model. An L2 penalty shrinks all weights toward zero, while an L1 penalty drives some weights to exactly zero, so the model effectively uses fewer features. Both discourage the model from relying too heavily on any single feature.

- Sparse autoencoder: A sparse autoencoder is designed to reconstruct the input data using only a small number of active hidden units for any given input. This constraint can prevent overfitting by forcing the model to learn a more compact and parsimonious representation of the data.

- Denoising autoencoder: A denoising autoencoder is trained to reconstruct the original input data from a corrupted version of the input. Denoising can help prevent overfitting by forcing the model to learn a more robust representation of the data that can tolerate noise and corruption.

- Variational autoencoder: A variational autoencoder learns a probability distribution over the latent representation rather than a single deterministic encoding. The extra loss term that keeps this distribution close to a prior acts as a regularizer, and the probabilistic formulation is also helpful in data generation and anomaly detection tasks.

By using these regularization techniques, it is possible to train more robust and generalizable autoencoders that can handle new, unseen data better.
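As a rough sketch, the first two techniques amount to adding extra terms to the reconstruction loss. The code below combines a mean squared error with an L2 penalty on the weights and an L1 penalty on the hidden activations. The L1 activation penalty is only one common way to encourage sparsity, and the function names and coefficient values are assumptions made for the example.

```python
import numpy as np

def l2_weight_penalty(weights, coeff):
    """Sum of squared weights over all weight matrices, scaled by coeff."""
    return coeff * sum(float(np.sum(W ** 2)) for W in weights)

def l1_activation_penalty(Z, coeff):
    """Mean absolute hidden activation, scaled by coeff (encourages sparsity)."""
    return coeff * float(np.mean(np.abs(Z)))

def regularized_loss(X, X_hat, weights, Z, l2_coeff=1e-4, l1_coeff=1e-3):
    """Reconstruction MSE plus the weight and sparsity penalties."""
    reconstruction = float(np.mean((X - X_hat) ** 2))
    return (reconstruction
            + l2_weight_penalty(weights, l2_coeff)
            + l1_activation_penalty(Z, l1_coeff))

# Tiny hand-checkable example: MSE = 1.0, L2 term = 0.1 * 16, L1 term = 0.5 * 1.
X = np.ones((2, 3))
X_hat = np.zeros((2, 3))
weights = [np.full((2, 2), 2.0)]
Z = np.ones((2, 4))
total = regularized_loss(X, X_hat, weights, Z, l2_coeff=0.1, l1_coeff=0.5)
print(total)
```

The coefficients control the trade-off: larger values push the model toward simpler solutions at the cost of a higher reconstruction error, so they are usually tuned on a validation set.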

Hyperparameter Optimization

Hyperparameter optimization is the search for the best values of a machine learning model's hyperparameters. In autoencoder training, these include the size of the hidden layers, the learning rate, and the number of epochs.

Several approaches to hyperparameter optimization include manual tuning, grid search, and random search.

Manual tuning involves selecting different values for the hyperparameters and evaluating the model's performance on a validation set to determine the optimal values. This approach can be time-consuming and may not necessarily find the optimal values.

Grid search involves defining a grid of hyperparameter values and evaluating the model's performance for each combination of values. This approach is more thorough than manual tuning but can be computationally expensive if the grid is large.

Random search involves randomly sampling combinations of hyperparameter values and evaluating the model's performance for each. This approach is less computationally expensive than an exhaustive grid search, but it is not guaranteed to try the best combination.

Another approach to hyperparameter optimization is Bayesian optimization, which uses a probabilistic model to guide the search for optimal values. Bayesian optimization can be more efficient than grid search or random search, but it can be more complex to implement.

Regardless of the approach used, it is essential to use a validation set to evaluate the model's performance and ensure that the hyperparameters are not overfitted to the training data.
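Grid search and random search can be sketched in a few lines. In the code below, `toy_validation_loss` is a made-up formula standing in for the expensive step of training an autoencoder with the given hyperparameters and measuring its loss on a validation set. The grid values and function names are also hypothetical.

```python
import itertools
import math
import random

def toy_validation_loss(params):
    """Stand-in for: train an autoencoder with `params`, return validation loss."""
    lr_term = (math.log10(params["learning_rate"]) + 2) ** 2
    size_term = abs(params["latent_dim"] - 8) / 8
    return lr_term + size_term

def grid_search(evaluate, grid):
    """Evaluate every combination in the grid and return (best_score, best_params)."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if best is None or score < best[0]:
            best = (score, params)
    return best

def random_search(evaluate, grid, n_trials, seed=0):
    """Evaluate n_trials randomly chosen combinations and return the best one."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {name: rng.choice(values) for name, values in grid.items()}
        score = evaluate(params)
        if best is None or score < best[0]:
            best = (score, params)
    return best

grid = {"latent_dim": [2, 8, 32], "learning_rate": [1e-1, 1e-2, 1e-3]}
grid_score, grid_params = grid_search(toy_validation_loss, grid)
rand_score, rand_params = random_search(toy_validation_loss, grid, n_trials=4)
print(grid_params, rand_params)
```

Grid search here needs nine evaluations, while random search uses only four and may or may not hit the best combination. That difference grows quickly as more hyperparameters are added to the grid.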

Real-World Applications of Autoencoders in Bioinformatics

Gene Expression Analysis

Gene expression analysis is the process of studying the activity of genes in a cell or tissue by measuring the levels of gene products, such as mRNA or proteins, present in a sample. This type of analysis can provide insights into the function of genes and how they contribute to the characteristics and behavior of an organism.

Several techniques can be used for gene expression analysis, including microarray analysis, quantitative polymerase chain reaction (qPCR), and RNA sequencing. These techniques allow researchers to measure the levels of mRNA (the molecule produced when a gene is transcribed) in a cell or tissue, which scientists can use as an approximate indicator of the corresponding protein levels.

We can apply gene expression analysis in a variety of real-world applications, including the identification of potential drug targets, the study of disease mechanisms, and the development of personalized medicine.

For example, by studying the gene expression patterns in cancer cells, researchers can identify genes that are overexpressed (expressed at higher levels than normal) or underexpressed (expressed at lower levels than normal). These genes may be good candidates for targeted therapies, as inhibiting the expression of overexpressed genes or increasing the expression of underexpressed genes may help to halt the progression of cancer.

Autoencoders can be used to analyze gene expression data in several ways. Autoencoders are a machine learning model that can learn to compress and reconstruct input data, such as gene expression data, by learning to encode the input data into a lower-dimensional representation (the encoding) and then decode the encoding back into a reconstruction of the original input data.

One application of autoencoders in gene expression analysis is to use them to identify patterns and relationships in the data that may not be immediately apparent to humans.

For example, an autoencoder trained on gene expression data from cancer cells may be able to identify patterns in the data indicative of the presence of cancer or the likelihood of a positive outcome following treatment.

Another application of autoencoders in gene expression analysis is to use them to impute missing data. Gene expression data can often be noisy or incomplete, with some genes missing expression values in specific samples. An autoencoder trained on a large dataset of gene expression data can learn to predict missing expression values based on the values of other genes in the same sample.
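One way to set this up, shown here as a sketch rather than an established recipe, is to compute the reconstruction loss only over the entries that were actually measured and then fill the gaps with the autoencoder's output. In the example, missing values are marked with NaN, and `X_hat` is a hand-written array standing in for the output of a trained autoencoder. The function names are illustrative.

```python
import numpy as np

def masked_mse(X, X_hat, observed):
    """Mean squared error over observed entries only (missing ones are ignored)."""
    diff = (X_hat - X)[observed]
    return float(np.mean(diff ** 2))

def impute(X, X_hat, observed):
    """Keep measured values and fill missing entries with the reconstruction."""
    return np.where(observed, X, X_hat)

# Toy expression matrix (samples x genes) with one missing value.
X = np.array([[1.0, np.nan],
              [3.0, 4.0]])
observed = ~np.isnan(X)

# Hypothetical autoencoder output for the same samples.
X_hat = np.array([[1.5, 2.0],
                  [3.0, 4.0]])

print(masked_mse(X, X_hat, observed))
print(impute(X, X_hat, observed))
```

Restricting the loss to observed entries matters because the missing values have no ground truth to compare against. Including them would either produce NaN losses or, if they were replaced by a placeholder such as zero, teach the network to reproduce the placeholder.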

Finally, autoencoders can extract useful features from gene expression data that can be used as input to other machine learning models. For example, an autoencoder trained on gene expression data from a particular tissue or cell type may learn a small set of latent features, each combining many genes, that are most important for distinguishing that tissue or cell type from others.

These extracted features could then be used as input to a classifier trained to predict the tissue or cell type of a new sample based on its gene expression data.

Autoencoders in gene expression analysis can help researchers better understand the underlying mechanisms and relationships in the data and aid in the development of more effective diagnostic and treatment approaches for diseases such as cancer.

Protein Structure Prediction

The structures of proteins are essential for understanding their function. Protein structure prediction is the computational prediction of the 3D structures of proteins from their amino acid sequences. It is a challenging problem in bioinformatics because a single amino acid chain can, in principle, fold into a very large number of possible configurations.

Protein structures can be determined experimentally, using techniques like X-ray crystallography and nuclear magnetic resonance (NMR) spectroscopy, or predicted computationally, using methods like homology modeling, threading, and de novo structure prediction.

One approach to protein structure prediction that has gained attention in recent years is using autoencoders.

Autoencoders are trained to reconstruct an input from that input's compressed representation or encoding. In protein structure prediction, autoencoders can be used to learn the relationships between the protein's amino acid sequence and its three-dimensional structure.

There are several real-world applications of autoencoders in bioinformatics for protein structure prediction. For example, autoencoders have been used to predict the 3D structure of proteins from their amino acid sequence, improve homology modeling accuracy, and identify novel protein folds.

Autoencoders have several advantages for protein structure prediction. They can handle large amounts of data and learn complex relationships between the amino acid sequence and the three-dimensional structure of proteins. They can also handle missing or incomplete data, which is common in protein structure prediction.

However, autoencoders also have some limitations for protein structure prediction. One limitation is that they can be sensitive to the quality and quantity of the training data and may not generalize well to proteins with novel folds or structures. Additionally, autoencoders can be computationally intensive, which can be a challenge for large-scale protein structure prediction.

Drug Discovery

Drug discovery is the process of identifying and developing new drugs to treat diseases. It is a complex, multi-step process with several stages, including target identification and validation, lead generation, lead optimization, and preclinical and clinical testing.

In real-world applications of autoencoders in bioinformatics, autoencoders can assist in drug discovery by analyzing large datasets of biological and chemical information, such as gene expression, protein structure, and chemical compound data. Autoencoders are effective at identifying patterns and relationships in large, complex datasets.

One way autoencoders can be used in drug discovery is by identifying potential drug targets. A drug target is a specific molecule in the body, usually a protein such as an enzyme or receptor, that is believed to play a role in the development or progression of a particular disease. Autoencoders can be trained on large datasets of genetic and proteomic data to identify potential drug targets based on the presence or absence of specific proteins or genes associated with the disease.

Another way in which autoencoders can be used in drug discovery is by generating and optimizing potential drug candidates. Once potential drug targets have been identified, the next step is to identify and optimize small molecules or compounds that can bind to and inhibit the function of those targets.

Autoencoders can analyze chemical compound data to identify potential drug candidates based on their structural and chemical properties. They can also be used to optimize those candidates by modifying their structure and properties to increase their effectiveness and reduce their toxicity.

Autoencoders can be a valuable tool in the drug discovery process by helping to identify and optimize potential drug targets and candidates and by providing a means of analyzing and interpreting large datasets of biological and chemical information.

Predictive Maintenance in Healthcare

Predictive maintenance in healthcare refers to using predictive analytics and machine learning techniques to anticipate failures or issues in healthcare equipment or systems before they occur. The goal is to prevent costly and disruptive downtime and to keep equipment operating optimally.

Autoencoders can be helpful for predictive maintenance in healthcare because they can identify patterns and anomalies in large datasets that may not be easily detectable by humans.

One real-world application of autoencoders in bioinformatics is predicting hospital equipment failures. For example, an autoencoder could be trained on a dataset of equipment maintenance records, including information about past failures and repairs.

By learning the patterns and features associated with equipment failures, the autoencoder could predict when a particular piece of equipment will likely fail. This prediction could then be used to schedule preventive maintenance or repair work, helping to prevent unexpected downtime and ensuring that equipment is operating at optimal performance.

Other potential applications of autoencoders in healthcare include the prediction of patient outcomes, the identification of biomarkers for disease diagnosis, and the prediction of drug efficacy and side effects.

Conclusion and Future Directions

Limitations of Autoencoders

Autoencoders can be used for various applications in bioinformatics, including dimensionality reduction, feature learning, and anomaly detection. However, one should consider several limitations to using autoencoders in bioinformatics.

Limited representation ability

Autoencoders are limited in their ability to represent complex data structures and thus may not correctly capture the underlying relationships in the data. This limitation can lead to poor performance when reconstructing the original data or extracting valuable features.

Sensitivity to noise

Autoencoders are sensitive to noise in the input data and can be easily thrown off by outliers or other anomalous data points. This can lead to poor reconstruction quality and may hinder the autoencoder's ability to learn valuable features.

Limited generalization

Autoencoders may not generalize well to new data, especially if the training data is small or not representative of the overall distribution of the data. This limited generalization can lead to poor performance on test data or when the model is applied to new datasets.

Training difficulties

Autoencoders can be challenging to train, especially for large datasets or datasets with complex structures. The training can require a lot of time and computational resources and may not always lead to satisfactory results.

Lack of interpretability

Autoencoders are not typically designed to be interpretable, and it can be challenging to understand what features the model has learned or how it is making decisions. The lack of interpretability can make understanding the results of an autoencoder challenging and may limit its usefulness in specific applications.

Overall, while autoencoders can be helpful tools for specific tasks in bioinformatics, it is essential to consider their limitations and evaluate whether they are the most appropriate method for a given problem.

Emerging Applications and Research in Autoencoders

Autoencoders are used for learning efficient data encodings in an unsupervised manner. Autoencoders have been applied to a range of emerging research areas, including:

Protein structure prediction

Autoencoders have been used to predict 3D protein structures from their amino acid sequences. This is challenging, as many possible configurations exist for a protein's structure. The encoded representation learned by the autoencoder can capture critical structural features that can be used to make more accurate predictions.

Gene expression analysis

Autoencoders have been used to analyze gene expression data, which reflects the levels of expression of different genes in different tissues or cell types. By learning an efficient encoding of the gene expression data, autoencoders can identify patterns and correlations that may be relevant to the function of the genes and the underlying biological processes.

Drug discovery

Autoencoders have been applied to the discovery of new drugs by learning to predict the binding affinity of small molecules to proteins, which is an essential factor in the effectiveness of a drug. By training autoencoders on large datasets of known protein-ligand binding affinities, it is possible to generate new molecules with predicted high binding affinities that can be tested for their potential as drugs.

Medical imaging

Autoencoders have also been used to analyze medical images, for example, CT scans and MRIs, to identify patterns and features indicative of specific diseases or conditions. For instance, autoencoders have been used to classify the stage of breast cancer based on mammogram images and to predict the presence of abnormalities in brain MRIs.

Natural language processing

Autoencoders have been applied to natural language processing tasks, such as text classification and machine translation, by learning to encode the input text in a lower-dimensional space and then decode it back to the original text.

Autoencoders have demonstrated outstanding potential for applications in bioinformatics and other fields. Their use will continue to expand as research in this area advances.

Final Thoughts and Conclusion

Proceed to the next lecture: Generative adversarial networks for generating synthetic data in bioinformatics